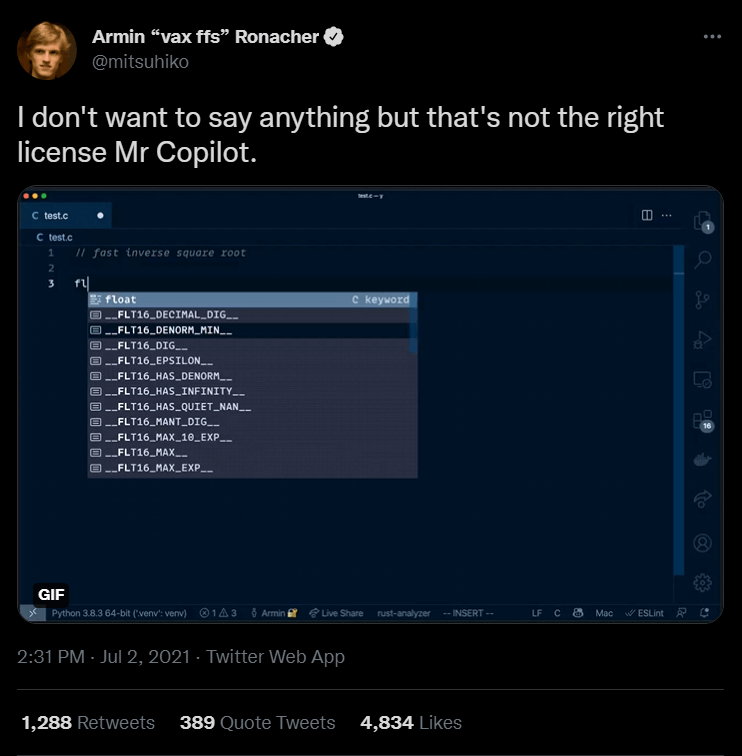

GitHub Copilot is a new code-generating tool from GitHub: an AI pair programmer that helps you write code faster and with less work. It draws context from comments and code and instantly suggests individual lines and entire functions. Copilot is powered by OpenAI Codex, a new AI system created by OpenAI, and its technical preview is available as a Visual Studio Code extension.

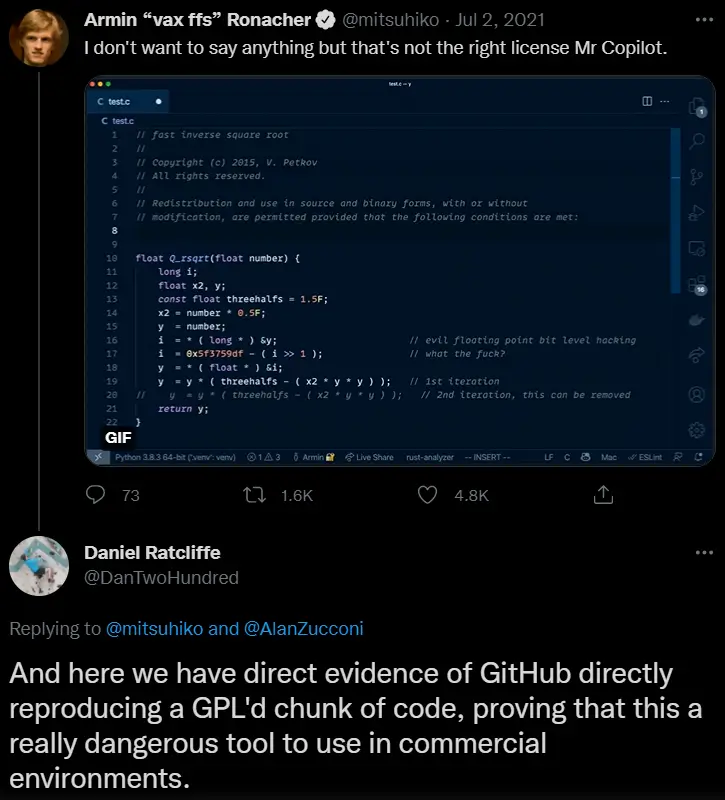

Armin Ronacher, a prominent developer in the open-source community, was experimenting with Copilot when it started to produce code. The lines, extracted from the source code of the 1999 video game Quake III, are infamous among programmers: a combination of little tricks that add up to some pretty basic math, imprecisely. The original Quake coders knew they were hacking. “What the fuck,” one commented in the code beside an especially egregious shortcut.
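The routine in question is widely known as the fast inverse square root. As a rough illustration of the kind of trick involved, here is a minimal Python sketch of the technique. It is not the original C source: the function name `fast_inverse_sqrt` is an illustrative choice, and the bit reinterpretation that the C code does with a pointer cast is emulated here with the standard `struct` module.

```python
import struct


def fast_inverse_sqrt(x: float) -> float:
    """Approximate 1 / sqrt(x) for a positive 32-bit float (illustrative sketch)."""
    # Reinterpret the bits of the 32-bit float as an unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Subtracting half of the bit pattern from a "magic" constant yields
    # a crude first guess at the inverse square root.
    bits = 0x5F3759DF - (bits >> 1)
    # Reinterpret the adjusted integer bits as a float again.
    guess = struct.unpack("<f", struct.pack("<I", bits))[0]
    # One Newton-Raphson step refines the guess.
    return guess * (1.5 - 0.5 * x * guess * guess)


if __name__ == "__main__":
    for value in (0.25, 1.0, 4.0, 100.0):
        print(value, fast_inverse_sqrt(value), value ** -0.5)
```

The result is close to the true value but not exact, which is the "imprecisely" in the description above: the shortcut trades accuracy for speed.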

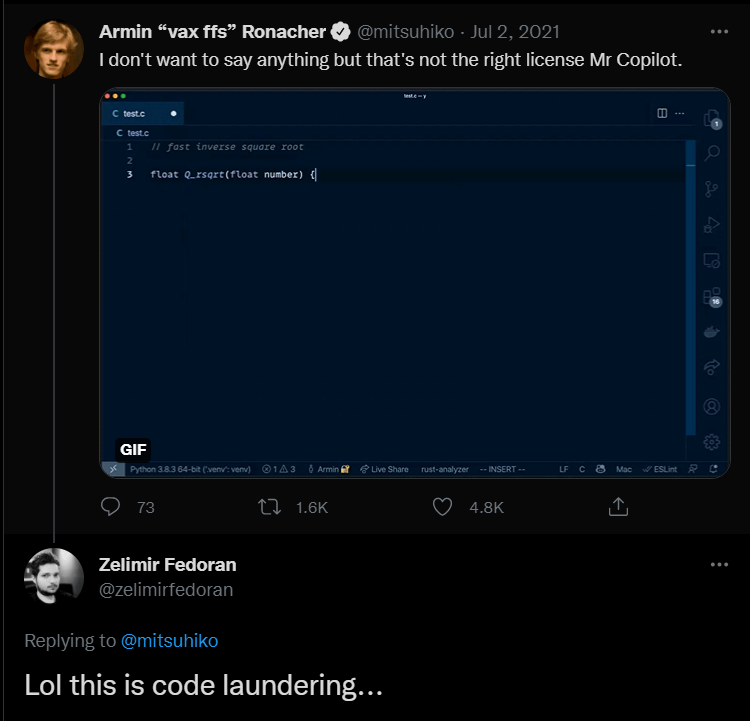

So it was strange for Ronacher to see such code produced by Copilot, an artificial intelligence tool that is marketed as generating code that is both novel and efficient. The AI was plagiarizing, copying the hack (including the profane comment) verbatim. Worse yet, the code it had chosen to copy was under copyright protection.

Ronacher posted a screenshot to Twitter, where it was entered as evidence in a roiling trial-by-social-media over whether Copilot is exploiting programmers’ labor.

Copilot, which GitHub describes as “your AI pair programmer,” is the outcome of a collaboration with OpenAI, the formerly nonprofit research lab known for powerful language-generating AI models such as GPT-3. At its heart is a neural network that is trained using massive volumes of data. Instead of text, though, Copilot’s source material is code: millions of lines uploaded by the 65 million users of GitHub, the world’s largest platform for developers to collaborate and share their work. GitHub, which was purchased by Microsoft in 2018, plans to sell access to the tool to developers.

The purpose is for Copilot to learn enough about the patterns in that code that it can do some hacking itself. It can take the incomplete code of a human partner and finish the job. For the most part, it appears successful at doing so.

To many programmers, Copilot is interesting because coding is difficult. While AI can instantly create photo-realistic faces and write credible essays in response to prompts, code has been mostly untouched by those advancements.

An AI-written text that reads strangely might be embraced as “creative,” but code offers less margin for error. A bug is a bug, and it means the code could have a security hole or a memory leak, or more likely that it just won’t work. But writing accurate code also demands a balance. The system can’t just repeat the exact code from the data used to train it, particularly if that code is protected by copyright. That’s not AI code generation; that’s plagiarism.

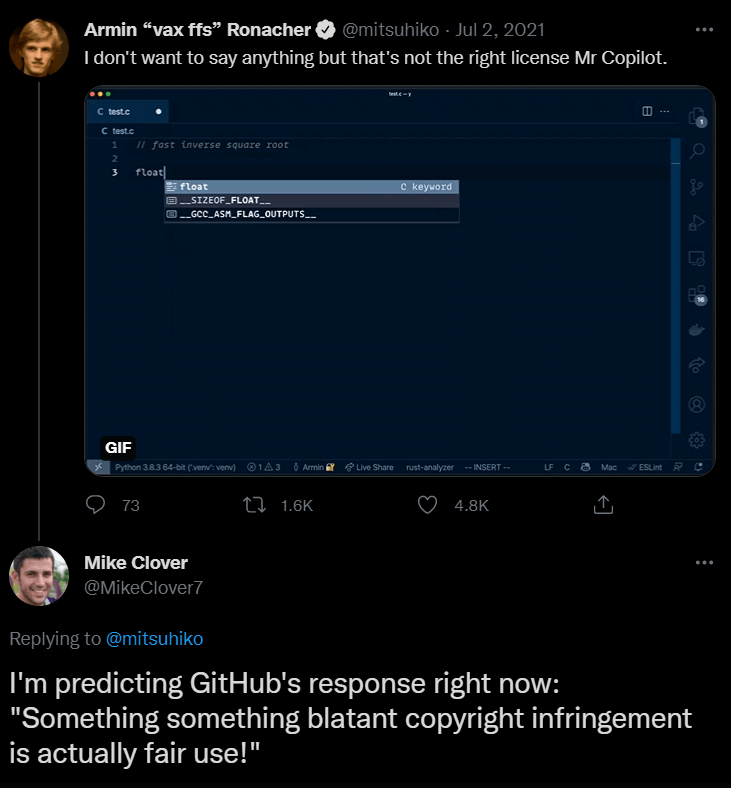

Soon afterward, Twitter users began weighing in.

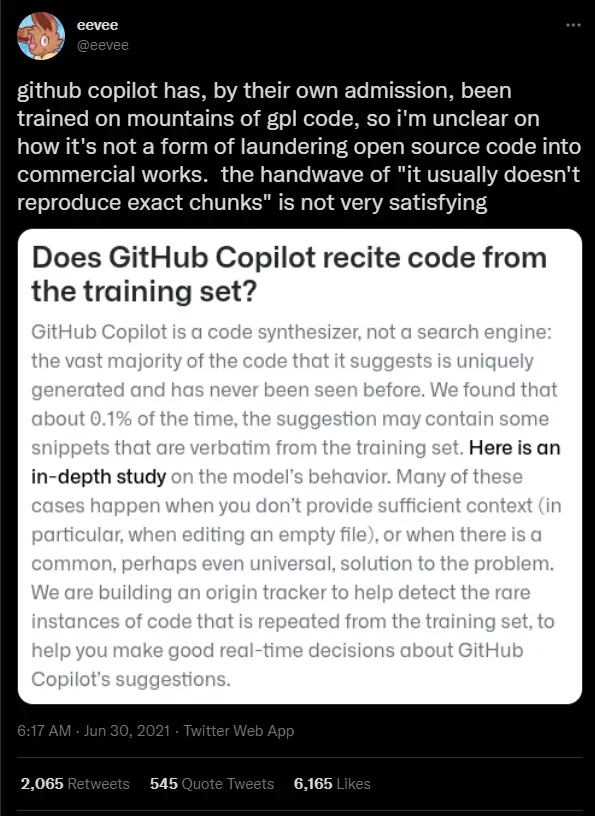

GitHub says Copilot’s slip-ups are rare, but critics assert the blind copying of code is less of an issue than what it exposes about AI systems generally: Even if code is not copied directly, should it have been used to train the model in the first place? GitHub has been unclear about exactly which code was included in training Copilot, but it has explained its position on the principles as the discussion over the tool has unfolded: All publicly available code is fair game, regardless of its copyright.

This hasn’t sat well with some GitHub users, who say the tool both depends on their code and ignores their wishes for how it will be used. The company has harvested both free-to-use and copyrighted code and “put it all in a blender in order to sell the slurry to commercial and proprietary interests,” says Evelyn Woods, a Colorado-based programmer and game designer whose tweets on the topic went viral. “It feels like it’s laughing in the face of open source.”

“I’m generally happy to see expansions of free use, but I’m a little bitter when they end up benefiting massive corporations who are extracting value from smaller authors’ work en masse,” Woods says.

AI tools bring industrial scale and automation to an old tension at the heart of open source programming: Coders want to share their work freely under permissive licenses, but they worry that the chief beneficiaries will be large businesses that have the scale to profit from it. A corporation takes a young startup’s free-to-use code to corner a market or uses an open-source library without helping with maintenance. Code-generating AI systems that rely on large data sets mean everyone’s code is potentially subject to reuse for commercial applications.

“You only really find out when you throw it out into the world and people use and abuse it,” says Colin Raffel, a professor of computer science at the University of North Carolina who co-authored a preprint (not yet peer-reviewed) examining similar copying in OpenAI’s GPT-2. Given that, he was astonished to see that GitHub and OpenAI had chosen to train their model with code that came with copyright restrictions.

Ronacher, the open-source developer, adds that most of Copilot’s copying seems to be almost harmless—situations where simple solutions to problems come up again and again, or quirks like the infamous Quake code, which has been (improperly) copied by people into many different codebases. “You can make Copilot trigger hilarious things,” he says. “If it’s used as intended I think it will be less of an issue.”

GitHub has also shown it has a potential solution in the works: a way to flag those verbatim outputs when they occur so that programmers and their lawyers know not to reuse them commercially. But creating such a system is not as easy as it sounds, Raffel notes, and it hints at the bigger problem: What if the output is not verbatim, but a near copy of the training data? What if only the variables have been changed, or a single line has been expressed in a different way? In other words, how much change is required for the system to no longer be a copycat? With code-generating software in its infancy, the legal and ethical boundaries aren’t yet clear.
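To make the difference between a verbatim copy and a near copy concrete, here is a minimal Python sketch. It is purely illustrative and is not how GitHub's flagging works, which the article does not describe. All function names are hypothetical, and it assumes the snippets being compared are Python source. It replaces every identifier with a placeholder so that renamed variables no longer hide a match, then scores how similar the remaining token sequences are.

```python
import difflib
import io
import keyword
import tokenize

# Token types that carry no meaning for this comparison (layout, comments).
_SKIPPED = {
    tokenize.COMMENT, tokenize.NL, tokenize.NEWLINE,
    tokenize.INDENT, tokenize.DEDENT, tokenize.ENDMARKER,
}


def normalized_tokens(source: str) -> list[str]:
    """Tokenize Python source, replacing every identifier with 'ID'."""
    tokens = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in _SKIPPED:
            continue
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            tokens.append("ID")  # variable, function, and builtin names alike
        else:
            tokens.append(tok.string)
    return tokens


def is_verbatim(a: str, b: str) -> bool:
    """True only when the two snippets match character for character."""
    return a.strip() == b.strip()


def is_renamed_copy(a: str, b: str) -> bool:
    """True when the snippets differ at most in identifier names."""
    return normalized_tokens(a) == normalized_tokens(b)


def similarity(a: str, b: str) -> float:
    """Score from 0.0 to 1.0 for how closely the token sequences match."""
    matcher = difflib.SequenceMatcher(
        None, normalized_tokens(a), normalized_tokens(b), autojunk=False
    )
    return matcher.ratio()


if __name__ == "__main__":
    original = "def area(w, h):\n    return w * h\n"
    renamed = "def size(a, b):\n    return a * b\n"
    print(is_verbatim(original, renamed))      # exact-match check
    print(is_renamed_copy(original, renamed))  # check after normalizing names
    print(similarity(original, renamed))
```

An exact-match check misses the renamed version, while the normalized comparison catches it. What the sketch cannot answer is the question Raffel raises: any cutoff applied to the similarity score is an arbitrary choice, not a legal or ethical standard.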

GitHub declined to answer questions about Copilot. In a series of posts on Hacker News, GitHub CEO Nat Friedman responded to the developer outrage by projecting confidence about the fair use designation of training data, pointing to an OpenAI position paper on the topic. GitHub was “eager to participate” in coming debates over AI and intellectual property, he wrote.

Ronacher says he expects advocates of free software to defend Copilot. But it’s unclear whether the tool will spark meaningful legal challenges that clarify the fair use issues anytime soon. He already uses permissive licenses whenever he can in the hope that other developers will pluck out whatever is useful, and Copilot could help automate that sharing process. “An engineer shouldn’t waste two hours of their life implementing a function I’ve already done,” he says.

But Ronacher can see the challenges. “If you’ve spent your life doing something, you expect something for it,” he says.